CHAPTER 6

Section I

Laboratory Preliminaries

The analysis of metals in the parts per billion or parts per trillion concentrations is a difficult and tedious process that requires optimum laboratory conditions. Many laboratories even have their sample handling and preparation sections of the lab physically separate from their instrument rooms in order to avoid contaminating instruments. Error associated with laboratory work can be divided into three general categories: sampling, sample preparation, and analysis. Entire books and statistics courses have been dedicated to proper sampling design and implementation in order to avoid errors (referred to as bias) in the collection of samples, such as in the proper sampling of lake water. Laboratory error has been significantly reduced by standardizing sample extraction procedures for a particular sample, element, compound, industry, and even a specific technician.

This beginning section of Chapter 6 is dedicated to a few of the more important factors associated with the instrumental analysis of samples. Before actual experimental procedures and results are presented, it is necessary to comment on sample preparation, special chemicals, and the calibration of an instrument. These topics are not normally covered in textbooks but are important for first-time instrument users. The types of samples that are commonly analyzed by FAAS and ICP will be discussed, including proper procedures that are critical to avoid common laboratory errors.

I.6.1 Types of Samples and Sample Preparation

There are various sample types that need to be analyzed for their metal concentration. For example, a geochemist may be interested in determining the concentration of a particular metal, such as lead, in a terrestrial or oceanic rock, or a fisheries biologist may be interested in determining the concentration of mercury in a particular fish species. However, the various instruments that could perform this task at a trace level (ppm or lower) are unable to accommodate a rock or fish matrix. As a result, sample preparation, specifically sample digestion, is needed before the instrument can be utilized. The chemical process to transform the solid sample into an aqueous sample must be undertaken carefully with the proper reagents and glassware. After the concentration of the digested sample is determined, simple calculations can be performed to accurately determine the concentrations of lead in a rock or mercury in a fish. If sample preparation is not performed properly, no instrumental component or laboratory technique can correct for a sampling/digestion error. Despite the obviousness of some of these techniques, this component of analytical chemistry still accounts for a considerable portion of the error associated with the final concentration.

I.6.1.1 Samples:

Before a sample preparation procedure (commonly referred to as a Standard Operating Procedure, or SOP) can be created, the analyte(s) of interest must be identified as well as the matrix. The most common type of sample matrix for metals is aqueous. The first step when analyzing water samples is to determine whether the total or dissolved metal concentration is of interest. All natural water samples contain particles; some are extremely small while others are visible to the naked eye. Frequently, particle size is used to differentiate between dissolved and suspended solids. For example, in environmental chemistry and other disciplines, most scientists accept that constituents of a sample are dissolved if they pass through a 0.45-µm filter; anything not passing through the filter is considered particulate. This will be the distinction between the dissolved and particulate phases used in this text. If the total metal concentration needs to be determined, the water sample may require strong acidification and heating prior to analysis depending on the concentration of metals in the solid phase. If the dissolved metal concentration is desired, the water is first filtered and then acidified, usually with high-purity nitric acid (1-3% acid) in a plastic container. The purpose of the acid in both techniques is to permanently dissolve any metals that are adsorbed to colloidal (very small) particles or the container walls. Some procedures require that samples be stored at 4 °C for less than one month prior to analysis.

Other sample matrices, such as gaseous, solid, and biological tissues, are usually chemically converted to aqueous samples through digestions. Metals contained in the atmosphere from geological processes, dust from wind, and high temperature industrial processes are frequently measured to model the fate and transport of metal pollutants. These metals may be in the atomic gaseous state, such as mercury vapor, or associated with suspended particles, such as cadmium or lead ions adsorbed to clay. One common sampling method is to utilize a vacuum to pull large volumes of air (tens to hundreds of cubic meters) through a filter. The filter is then removed and the metals are extracted with acid to dissolve them into an aqueous solution that is then analyzed by FAAS, FAES or ICP. This type of sampling and analysis is commonly performed in urban areas to monitor atmospheric metal emissions and pollution. Standardized methods for these types of measurements have been developed by the US Environmental Protection Agency (EPA).

The metal concentration in a solid sample or geological material is also frequently analyzed by AAS and ICP. Sometimes these samples can be analyzed directly by special attachments to AAS and ICP units (as described in Chapters 2 and 3), but they are more commonly digested or extracted with acids. The resulting aqueous metal solution is then analyzed as described in Section 6.1.3. However, the specific type of digestion depends on the ultimate goal of the analysis. If adsorbed metal concentrations are of interest, soil/sediment samples are simply placed in acid (usually 1-5% nitric acid), heated for a specified time, diluted, and filtered, and the filtrate is then analyzed as if it were a liquid sample. If the total metal concentration is desired, as in many geological applications, the sample is completely digested with a combination of hydrofluoric, nitric, and perchloric acids; again, the samples are then diluted, filtered, and analyzed as an aqueous sample. Procedures are available from the US EPA and the US Geological Survey (USGS).

Biological tissues present a problem similar to that of soil and sediment samples. In order to create an aqueous sample, the tissue sample is first digested in acid to oxidize all organic matter. This is accomplished with a combination of sulfuric and nitric acids (1-5% each) and 30% hydrogen peroxide, digesting for 24 hours and applying heat when necessary. After the tissue has been dissolved, the sample is diluted, filtered, and analyzed as an aqueous sample. Each of the digestion procedures described above dilutes the original metal concentration in the sample. Thus, a careful accounting of all dilutions of a sample is required. After a concentration is obtained from an AAS or ICP system, it must be adjusted for the dilution(s) to determine the concentration in the original sample. Calculations concerning these dilutions will be discussed in the final paragraphs of this section.

I.6.1.2 Chemical Reagents:

A variety of chemicals are used in the analysis of metals. Mostly, these include reagents used in the digestion of solid samples or tissues to release metals into an acidic aqueous solution. The majority of these chemicals fall into two categories: oxidants and acids. Common digestion reagents include oxidants such as hydrogen peroxide (H2O2) and potassium perchlorate (KClO4) for oxidizing organic matter or tissue. Common acids include hydrochloric acid (HCl), hydrofluoric acid (HF), sulfuric acid (H2SO4), nitric acid (HNO3), and perchloric acid (HClO4). As manufacturers continue to produce commercially available instruments with lower and lower (better) detection limits, the purity of the reagents that are utilized to prepare samples must also improve in order to take advantage of these instrumental advances. Many of the reagents commonly used to prepare samples are commercially available in ultra-pure grades. However, purchasing these ultra-pure reagents is often expensive because of the relatively large volume of the acids or the oxidants needed to oxidize and remove the matrix prior to analysis. In addition, these ultra-pure acids can still contain some metals at ppt to ppb concentrations, especially lead and mercury. Some laboratories that analyze large numbers of samples choose to prepare their own acids by distilling reagent grade acids. While this may seem like a major inconvenience, the cost savings are obvious when a 2-L bottle of ultra-trace metal nitric acid (double distilled and packed under clean room conditions) currently lists for several hundred dollars while a 2.5-L bottle of ACS grade nitric acid costs only tens of dollars. The Teflon distillation apparatus used to produce purer versions of these acids in-house costs only approximately $5000 (US).

While the purity of the chemical reagents is important, the purity of dilution water is also important. Due to the presence of trace metals and salts, the use of tap water is out of the question. House resin-deionized (DI) water may be sufficiently pure for some flame-based methods but may be inadequate for emission and mass spectrometry techniques. For these techniques, house DI is usually passed through a commercially available secondary resin filter system (such as the Nanopure® water filtration system from Millipore and Barnstead manufacturers) that removes metal concentrations down to the parts per trillion concentration. If concentrations in samples are to be measured below this level, cleaner water must be obtained. Commonly available resin-based systems cost approximately $5,000-$10,000.

Despite the extensive preparations and costs associated with acquiring pure reagents, there is usually some detectable metal concentration in the reagents. In order to correct for the presence of trace metals, their concentration in a reagent blank must be determined. A reagent blank is a solution that contains every reagent in the digestion and analysis procedure but lacks the actual sample. This blank allows for any metal concentrations present in the reagents (including dilution water) to be accounted for and subtracted from the metal concentrations found in the sample. This process ensures that the final concentration is representative of the analyte's presence in the original sample and not of contamination from the added reagents.

I.6.1.3 Glassware:

AAS, AES, and ICP instruments yield concentration data with 3-4 significant figures. Thus, quantitative glassware with a similar number of significant figures must be used in order for the instrumental significant figures to accurately represent the concentration of the sample. The most accurate dilutions are achieved with Class A pipettes and volumetric flasks. These pipettes and flasks are almost always made of glass, which can present a problem for trace metal analysis in techniques such as ICP-MS. Trace levels of some metals in water commonly "stick" (adsorb) to glass, and some glass containers actually contain metals in their matrix and can release measurable concentrations when acidic solutions are added to them. In order to avoid this source of contamination, plastic or Teflon materials may be required for delivery, digestion, and storage of these samples. The use of manual pipetters with plastic tips, however, results in slightly less precise data (approximately three significant figures), but these devices are rapidly being adopted in the laboratory.

I.6.2 Calibration Techniques

Once a sample has been prepared properly, it is necessary to calibrate the instrument to determine the concentration in the sample. Scientific instruments are number generators, and an improperly working instrument can be a random number generator. These number generators are transformed into valuable analytical tools with the use of calibration techniques. There are various ways to determine the concentration of a sample through the use of external standards, standard addition, or semi-quantitative methods.

I.6.2.1 External Standards:

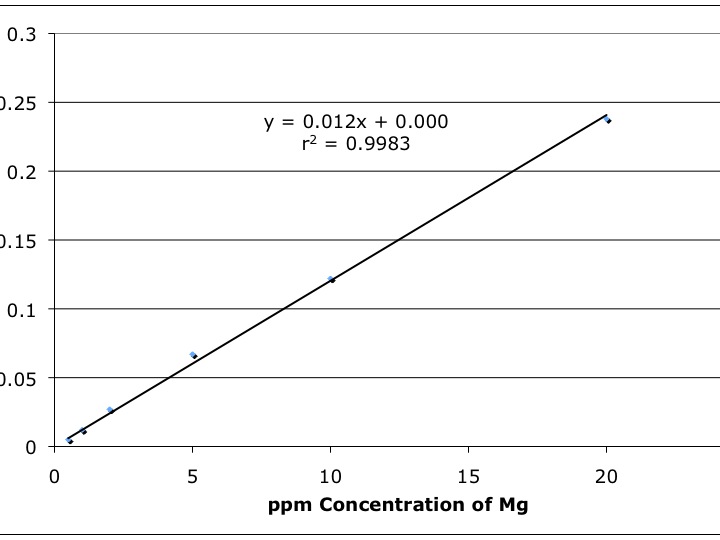

The most common technique to determine the concentration of a sample is through the use of external standards. For example, before a sample of water is analyzed for cadmium (Cd), a series of known Cd concentrations in acidic water ranging from 0.010 ppm to 50.0 ppm would be analyzed. The respective absorbance or emission values for each standard are tabulated. Next, a plot of detector response (in this case, absorbance or counts per second) versus the respective metal concentration is made, with the response on the y axis and the concentration on the x axis. Such a plot is shown in Figure 6-1, where a flame atomic absorption spectrometer was used to measure the concentration of Mg in various external standards.

Figure 6-1. Instrument Response (absorbance) versus Mg Concentration (ppm) by FAAS.

Note the linearity of the data plot; most calibration "curves" are lines. This linear detector response is preferred since it allows for easier statistical calculations. Once an instrument is calibrated with an external standard, it can be used to estimate the concentration of an analyte in an unknown sample. This is accomplished by performing a linear regression on the data set and obtaining an equation for the calibration line. For Figure 6-1, this equation is y = 0.012x + 0.000, where 0.012 is the slope of the line and 0.000 is the y-intercept. Next the equation is rearranged to solve for x (the reader should do this now). When the actual sample is analyzed on the instrument, the readout will give the absorbance of the sample that corresponds to the value of y in the linear regression. For example, if an unknown sample yields an absorbance reading of 0.062, the Mg concentration would be estimated at 5.03 ppm with the rearranged linear regression equation (the reader should perform this calculation now; note that the rounded slope quoted above gives 0.062/0.012 ≈ 5.2 ppm, a difference that presumably reflects rounding of the regression coefficients). Another important statistical quantity is r² (Figure 6-1), a measure of the "goodness of fit" between the linear model and the experimental data. The closer the r² value is to 1.000, the more the linear model for the data approaches a perfect fit.
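The regression and back-calculation described above can be sketched in a few lines of Python. The standard concentrations and absorbances below are hypothetical values chosen to lie close to a slope of 0.012; they are not the data behind Figure 6-1, and the function names are illustrative only.

```python
# Hypothetical external standards (ppm Mg) and absorbances; NOT the Figure 6-1 data.
conc = [0.0, 1.0, 2.0, 5.0, 10.0]
absorbance = [0.001, 0.013, 0.024, 0.061, 0.121]


def fit_line(x, y):
    """Ordinary least-squares fit of y = m*x + b; returns (m, b, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    m = sxy / sxx
    b = mean_y - m * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return m, b, r_squared


def concentration_from_signal(signal, m, b):
    """Rearranged calibration line: x = (y - b) / m."""
    return (signal - b) / m


m, b, r2 = fit_line(conc, absorbance)
unknown = concentration_from_signal(0.062, m, b)
print(f"slope = {m:.5f}, intercept = {b:.5f}, r^2 = {r2:.5f}")
print(f"estimated concentration of unknown = {unknown:.2f} ppm")
```

Carrying the slope and intercept at full precision, rather than re-typing rounded values, avoids small discrepancies in the estimated concentration.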

Some calibration lines do not automatically intersect at the origin (x=0,y=0) because of the presence of small concentrations of analyte in the blank sample or because of "unbalanced" electronics (the detector not reading zero absorbance for the blank due to noise). This is especially true as the analyst approaches the detection limit of the instrument. In the past, the blank absorbance reading was simply manually subtracted from other readings prior to plotting the data. Today, modern instruments that are connected to computers perform many of these tasks automatically. Frequently, calibration lines, subtraction of blanks, estimating unknown sample concentrations, and even dilutions can all be calculated or accounted for automatically by the computer. This eliminates the need to re-enter data into another computer and decreases typographical and transposing errors. However, these automatic procedures should be monitored to ensure that accurate data are being generated.

I.6.2.2 Standard Addition:

Another form of calibration, standard addition, can be used to account for matrix effects (such as surface tension or viscosity) or other problems (such as chemical interferences). For example, in FAAS and FAES analysis, the sample is drawn into the nebulizer by a constant vacuum source; if the viscosities of the solutions being analyzed are not all the same, then different flow rates of sample or standard solution will reach the flame and therefore different masses of metal will be measured for each. (This is not a problem in ICP since a pump is used to deliver sample to the nebulizer.) A sample containing significant amounts of sugar, commonly found in food products, will have a higher viscosity and will move more slowly through the inlet tube than standards that are made up in relatively pure water; hence, the resultant signal obtained from this external standard-based analysis will be inaccurately low for the metal of interest. If the standard addition method described below is used, more accurate metal concentrations for the samples will be obtained.

In standard addition analysis, equal volume aliquots of sample are added to each of several volumetric flasks (e.g. 15 mL of sample to each 25 mL flask). Increasing concentrations of the metal analyte of interest are added to each flask, beginning at zero and increasing to the end of the linear range of the instrument (e.g. one flask will have 0.00 ppm, the next will have 1.00 ppm, the next will have 5.00 ppm, etc.). Acid is added to each flask to reach a specific percent (usually 1-3%). Finally, each flask is filled to the 25-mL volumetric line. Thus, each flask has an equal volume of sample and a linear increase of known analyte added, starting at 0.00 ppm in the "blank" flask and ending at the highest analyte concentration. After the samples are analyzed, the detector response is plotted as a function of the concentration of analyte added. A linear regression (y = mx + b) is performed and the linear equation can be rearranged to solve for x, the concentration, as a function of y, the detector response. The concentration of the blank sample (the diluted sample containing no reference standard) is determined by computing the x intercept of the line (where y = 0.00); the intercept is negative, and its absolute value is the analyte concentration in the diluted sample. Because each aliquot was diluted from 15 mL to 25 mL in this example, that value is multiplied by 25/15 to obtain the concentration in the undiluted sample.
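The standard addition calculation can be sketched as follows. The detector responses are hypothetical, the added concentrations are assumed to be expressed as ppm in the final 25-mL flask, and the variable names are illustrative.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = m*x + b; returns (m, b)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    m = sxy / sxx
    return m, mean_y - m * mean_x


sample_volume_ml = 15.0   # aliquot of sample placed in each flask
flask_volume_ml = 25.0    # final volume of each flask

# Assumed: spike concentrations refer to the final flask volume (ppm).
added_ppm = [0.00, 1.00, 2.00, 5.00]
# Hypothetical detector responses (absorbance) for the four flasks.
response = [0.036, 0.048, 0.060, 0.096]

m, b = fit_line(added_ppm, response)

# The x-intercept (y = 0) is negative; its magnitude is the analyte
# concentration contributed by the sample in the diluted flask.
x_intercept = -b / m
diluted_conc = abs(x_intercept)

# Correct for the 15 mL -> 25 mL dilution to recover the original sample.
original_conc = diluted_conc * flask_volume_ml / sample_volume_ml

print(f"x-intercept = {x_intercept:.2f} ppm")
print(f"concentration in diluted sample = {diluted_conc:.2f} ppm")
print(f"concentration in original sample = {original_conc:.2f} ppm")
```

Because the standards are prepared in the sample matrix itself, the slope already reflects any viscosity or interference effects, which is the point of the method.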

I.6.3 Figures of Merit

The individual calibration lines that instruments generate are dependent upon numerous variables such as laboratory technique, instrument components and operating parameters. In order to compare different instruments to one another, three figures of merit have been developed to quantitatively compare different analytical techniques. These are sensitivity, detection limit, and signal to noise ratio (S/N).

Sensitivity refers to the slope of the calibration line. Figure 6-2 shows two calibration lines, one with a steep slope and another with a shallower slope. The determination of which level of sensitivity is best depends on the situation. Calibration line 1 is referred to as being more sensitive since it will allow the analyst to distinguish between smaller differences in concentration (e.g. it allows the determination of 30.1 from 30.2 as opposed to 30 versus 40). This type of calibration line would be of interest when high degrees of accuracy are needed. If the screening of samples for gross differences in concentration is required, line 1 would be of little use since it has only a limited dynamic range (small range in analyte concentrations) and its use could require the dilution of samples outside the calibration range. In this case, calibration line 2 would be useful since it covers a larger dynamic range but, again, yields less accuracy (fewer significant figures).

Figure 6-2. Calibration Lines with Differing Sensitivity.

Another related parameter, calibration sensitivity, is mathematically defined as

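$$S = mc + b$$

where $S$ is the detector signal, $c$ is the analyte concentration, $b$ is the y-intercept, and $m$, the slope of the calibration line from the linear regression, is the calibration sensitivity. Another form of sensitivity is analytical sensitivity, defined as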

$$\text{analytical sensitivity} = \frac{m}{\text{s.d.}}$$

where s.d. is the standard deviation of the slope estimate. A range of sensitivities for the identical analyte is usually possible on the same instrument. High sensitivity is accomplished by "tuning" an instrument to its maximum sensitivity before testing the external standards. A lower sensitivity can be accomplished by "detuning" the instrument to obtain a lower slope and a broader range of useful analyte concentrations. The term sensitivity is commonly, but incorrectly, interchanged with detection limit.

Another figure of merit is the detection limit; this is one of the most important ways to compare two analytical techniques and brands of instruments. The detection limit determines the limitations of the instrument or technique and is commonly and incorrectly referred to as "zero" concentration of the analyte. For example, it is often stated that "no" pollutant was found in a sample. What does "no" mean? Absolute zero? Probably not. Zero concentration does not typically mean that no analyte exists, since there are almost always a few atoms or molecules of any substance in any sample. Most people think the air they breathe is "free" of hazardous chemicals; few know it, but the air that they just took into their lungs contains measurable concentrations of PCBs and Hg. However, these concentrations are only measurable under extremely difficult analytical conditions. Instead, it would be more accurate to report the detection limit of the technique and indicate that the sample was below that limit (for example, the sample has less than 26 ppt of pollutant). So, how does an analyst quantitatively determine the detection limit? To illustrate this problem, Figure 6-3 shows three signal responses (these can be measures of absorbance or counts per second). Which of these signals can we confidently say is attributed to the analyte of interest instead of random background noise?

Figure 6-3. Illustration of Signal to Noise and Instrument Responses for a Sample.

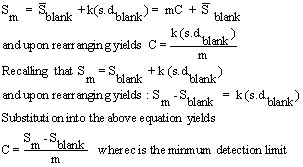

Most everyone would agree that the right-hand sample signal would be attributed to the analyte. Some would also agree with the middle figure, but fewer analysts, if any, would call the left-hand spike the analyte. A quantitative way of distinguishing noise from a sample signal has been developed using the results from the linear regression process described earlier. In order to complete this process, a range of calibration standards is analyzed on an instrument with blank samples being run before, during, and after the standards. The responses for five to ten blanks are averaged and a standard deviation is calculated. Next, a linear regression is completed for the results of the external calibration data. This data is then utilized to determine the detection limit by using the following equations.

First, the minimum signal that can be distinguished from the background is determined by defining a constant k, usually equal to three (3.00..., a defined number), in the equation

$$S_m = \bar{S}_{\text{blank}} + k\,(\text{s.d.}_{\text{blank}})$$

where $S_m$ is the minimum signal discernible from the noise, $\bar{S}_{\text{blank}}$ is the average blank signal, and $\text{s.d.}_{\text{blank}}$ is the standard deviation of the blank measurements. The k value of 3 is common across most disciplines and basically means that a signal can be attributed to the analyte if it has a value that is larger than the average blank signal plus three times the standard deviation of the blank.

Next, recall the calibration line equation

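$$S = mc + b$$

Redefining $S$ as $S_m$ and $b$ as $\bar{S}_{\text{blank}}$ (to be consistent with most statistics textbooks) yields

$$S_m = m\,c_m + \bar{S}_{\text{blank}}$$

which can be rearranged to give the detection limit, $c_m$, the minimum concentration that can be distinguished from the blank:

$$c_m = \frac{S_m - \bar{S}_{\text{blank}}}{m}$$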

This method gives a quantitative and consistent way of determining the detection limit once a value of k is determined (again, usually 3.00).
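As a minimal sketch of this procedure, the following Python snippet computes the minimum distinguishable signal and the corresponding detection limit. The seven blank readings and the calibration slope are hypothetical, and the sample standard deviation (n - 1 in the denominator) is assumed.

```python
import statistics

# Hypothetical replicate blank readings (absorbance).
blank_signals = [0.0010, 0.0012, 0.0008, 0.0011, 0.0009, 0.0010, 0.0010]
slope = 0.012   # hypothetical calibration slope (absorbance per ppm)
k = 3           # conventional multiplier

mean_blank = statistics.mean(blank_signals)
sd_blank = statistics.stdev(blank_signals)   # sample standard deviation

# Minimum signal distinguishable from the background.
s_min = mean_blank + k * sd_blank

# Detection limit: concentration corresponding to s_min on the
# calibration line whose intercept is taken as the mean blank signal.
detection_limit = (s_min - mean_blank) / slope

print(f"mean blank = {mean_blank:.5f}, s.d. = {sd_blank:.5f}")
print(f"minimum signal = {s_min:.5f}")
print(f"detection limit = {detection_limit:.3f} ppm")
```

Note that the mean blank cancels in the last step, so the detection limit reduces to k times the blank standard deviation divided by the slope.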

The final figure of merit quantifies the noise in a system by calculating the signal to noise ratio. Sources of noise are commonly divided into environmental, chemical, and instrumental sources; these were discussed in the detector section of the FAAS chapter (Chapter 2) and are relevant to almost all detector systems.

The signal to noise ratio (S/N) is determined by

$$\frac{S}{N} = \sqrt{n}\,\frac{S_x}{N_x}$$

where $S_x$ and $N_x$ are the signal and noise readings for a specific setting and $n$ is the number of replicate measurements. One of the most common noise reduction techniques is to take as many readings as are reasonably possible. The more replicate readings a procedure utilizes, the greater the S/N, which increases with the square root of the number of measurements. By taking two measurements, one can increase the S/N by a factor of 1.4; by taking four measurements, the analyst can cut the noise in half.
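The square-root dependence on the number of replicates can be illustrated with a short sketch. It assumes the noise is random and uncorrelated between readings; the function name and the example signal and noise values are hypothetical.

```python
import math


def signal_to_noise(signal, noise, n_replicates=1):
    """S/N after averaging n replicate readings of random, uncorrelated noise."""
    return math.sqrt(n_replicates) * signal / noise


single = signal_to_noise(10.0, 2.0, n_replicates=1)
for n in (1, 2, 4, 16):
    improved = signal_to_noise(10.0, 2.0, n_replicates=n)
    print(f"n = {n:2d}: S/N = {improved:.2f} ({improved / single:.2f}x a single reading)")
```

The diminishing return is the practical point: going from 4 to 16 readings only doubles the S/N again.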

For the topics covered in this e-textbook, AAS and ICP-AES instruments generally allow for multiple measurements or for an average measurement, for example over 5-10 seconds, to be taken. For ICP-MS, again, multiple measurements are usually taken, usually 3-5 per mass/charge value. And of course, the analyst can analyze a sample multiple times given the common presence of automatic samplers in the modern laboratory.

These statistical calculations, since they are dependent upon the linear calibration curve, can only be accurately applied over the range of measured external standards. This is because detectors do not always give linear responses at relatively low or relatively high concentrations. As a result, all sample signals must be within the range of signals contained in the calibration line. This is referred to as "bracketing." If sample signals (and therefore concentrations) are too high, the samples are diluted and re-analyzed. If the samples are too low, they are concentrated by a variety of techniques or they are reported to be below the minimum detection limit. In most cases, the limit of quantification (LOQ) is at the lowest reference standard. The limit of linearity (LOL) is where the calibration line becomes non-linear, at the upper and lower ends of the line. In a case where the sample concentration is below the lowest standard but above the calculated detection limit, it is acceptable to record that sample as containing "trace concentrations" of the analyte, but usually no actual number is quoted.
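The reporting rules in this paragraph can be summarized as a small decision function. This is only a sketch: the function name, the wording of the returned messages, and the threshold values in the usage lines are hypothetical.

```python
def classify_result(conc, detection_limit, lowest_standard, highest_standard):
    """Decide how a measured concentration should be reported.

    All arguments are in the same concentration units (e.g. ppm).
    """
    if conc < detection_limit:
        return f"below detection limit (< {detection_limit})"
    if conc < lowest_standard:
        return "trace concentration (no number reported)"
    if conc > highest_standard:
        return "above calibration range: dilute and re-analyze"
    return f"{conc} (bracketed by the standards)"


# Hypothetical limits: detection limit 0.03 ppm, standards from 0.10 to 10.0 ppm.
for measured in (0.01, 0.05, 4.2, 25.0):
    print(measured, "->", classify_result(measured, 0.03, 0.10, 10.0))
```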

Technically, a fourth figure of merit, selectivity, exists but this is more obvious. Selectivity refers to the ability of a technique or instrument to distinguish between two different analytes (e.g. calcium versus magnesium or $^{12}$C versus $^{13}$C). For example, atomic absorption spectrometry (AAS) and inductively coupled plasma-atomic emission spectrometry (ICP-AES) can distinguish (are selective) between calcium and magnesium but not between different isotopes of the same element. Inductively coupled plasma-mass spectrometry (ICP-MS) can be selective for isotopes and elements depending on the mass resolution of the instrument.

I.6.4 Calculating Analyte Concentrations in the Original Sample

Dilutions and digestions are routine practice in the analysis of metal compounds. Once the concentration of the aqueous sample is determined, an interesting problem arises: "How does the analyst relate the concentration in a diluted sample to the concentration in the original sample?" In general, this is accomplished by carefully performing and recording all steps of the sample preparation. A more detailed explanation of the calculation is offered in the following example problem:

Problem Statement:

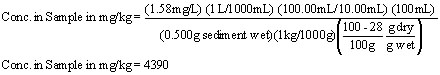

An analyst takes 0.500 grams of wet sediment and prepares it for cadmium (Cd) analysis by FAAS. The purpose of the analysis is to determine the concentration of Cd adsorbed to the sediment on a dry weight basis. The water content in the wet sample was gravimetrically determined to be 24.8%. The wet sample is then measured out and digested in 25 mL of DI water and 3.00 mL of ultra-pure nitric acid on a hot plate at 80 °C for one hour. The sample is then cooled and filtered through a 0.45-µm filter into a 100-mL volumetric flask that is then filled to the mark with DI. Previous analysis indicated that the sample needed to be diluted by a factor of ten to be within the linear concentration range of the instrument. The diluted sample was analyzed on an instrument and a concentration of 1.58 mg/L was measured for the aqueous sample. What is the concentration of Cd in the original, dry weight sample?

First, the data are tabulated.

| Operation | Raw Value of the Operation | Error Associated with Each Operation (plus or minus) |
|---|---|---|
| Weighing (g) | 0.500 | 0.002 |
| Dilution I (mL) | 100.00 | 0.08 |
| Pipetting (mL) | 10.00 | 0.02 |
| Dilution II (mL) | 100.00 | 0.08 |
| Water Content (%) | 24.8 | 0.5 |
| Conc. Estimate from Linear Regression (mg/L) | 1.58 | 0.06 |

Next, the analyte concentration measured by the instrument is back-calculated to the concentration in the original sample. Note the accounting of each of the measurements in this calculation.
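One way to carry out this back-calculation from the tabulated values is sketched below in Python. It assumes that the 24.8% water content is expressed on a wet-weight basis, and it combines the tabulated uncertainties as relative errors added in quadrature, which is a common convention but an assumption here; the variable names are illustrative.

```python
import math

# Tabulated values and their +/- errors from the problem statement.
wet_mass_g, wet_mass_err = 0.500, 0.002
dilution1_ml, dilution1_err = 100.00, 0.08    # digest made up to volume
pipette_ml, pipette_err = 10.00, 0.02         # aliquot taken for dilution
dilution2_ml, dilution2_err = 100.00, 0.08    # aliquot made up to volume
water_pct, water_err = 24.8, 0.5              # assumed wet-weight basis
measured_mg_l, measured_err = 1.58, 0.06      # instrument result

# Undo the second dilution: concentration in the 100-mL digest.
digest_mg_l = measured_mg_l * dilution2_ml / pipette_ml

# Mass of Cd extracted from the sediment (mL -> L).
cd_mass_mg = digest_mg_l * dilution1_ml / 1000.0

# Dry mass of the sediment actually digested.
dry_fraction = 1.0 - water_pct / 100.0
dry_mass_g = wet_mass_g * dry_fraction

cd_mg_per_g = cd_mass_mg / dry_mass_g

# Assumed error model: relative errors combined in quadrature.
relative_errors = [
    wet_mass_err / wet_mass_g,
    dilution1_err / dilution1_ml,
    pipette_err / pipette_ml,
    dilution2_err / dilution2_ml,
    (water_err / 100.0) / dry_fraction,
    measured_err / measured_mg_l,
]
relative_total = math.sqrt(sum(e ** 2 for e in relative_errors))
cd_err_mg_per_g = cd_mg_per_g * relative_total

print(f"Cd in digest: {digest_mg_l:.1f} mg/L")
print(f"Cd on a dry weight basis: {cd_mg_per_g:.2f} +/- {cd_err_mg_per_g:.2f} mg/g")
```

Under these assumptions the uncertainty is dominated by the regression estimate of the concentration, so tightening the volumetric steps would change the final error very little.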


©Dunnivant & Ginsbach, 2008